Standdown points to AI as THE TOOL to create a new SAFETY INTELLIGENCE: how to get there?

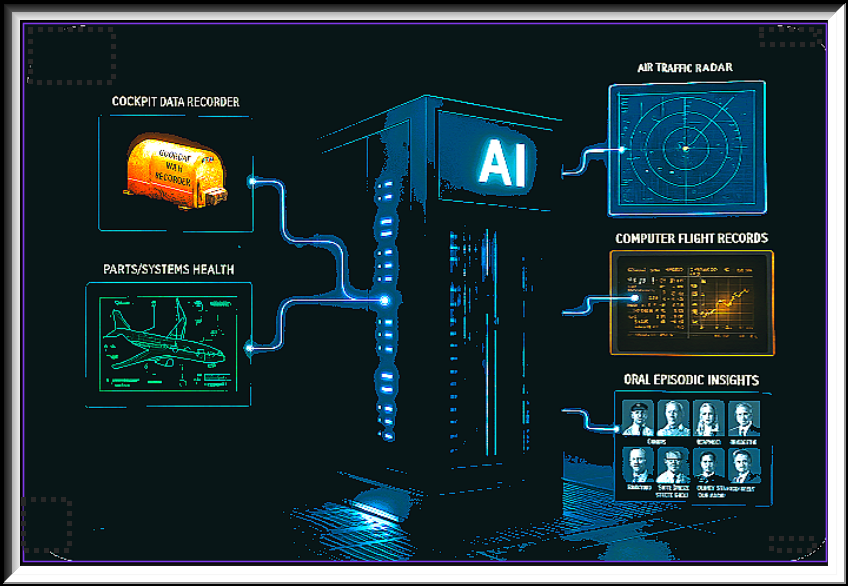

At the recent Bombardier Safety Standdown 2025, CAE’s Senior Manager of Product Management, Clément Cateau, Ing., gave a provocative presentation in which he postulated that Artificial Intelligence might be enlisted to enhance the learning value of simulator training. He labeled this new cache of SAFETY RISK information as simulator operational quality assurance. This is all part of what Cateau identified as “SAFETY INTELLIGENCE…it’s about collecting data, transforming raw data into actionable insights for safety management systems.” His quote suggests the magnitude of his vision of what should be included in such a metadata file: an immense volume of relevant events.

Can AI digest such a voluminous flow of learning points? AI[1] (Copilot) says:

In THEORY, AI CAN ANALYZE vast quantities of aviation safety data—but there are PRACTICAL LIMITS.

✅ What AI can handle:

- Millions to billions of data points across structured and unstructured formats (e.g., sensor logs, pilot reports, maintenance records, ATC transcripts).

- Multimodal inputs: text, time-series, audio, video, and simulation data.

- Real-time ingestion and pattern recognition using scalable cloud infrastructure and parallel processing.

⚠️ Practical constraints:

- Compute and memory limits: Even large models have finite capacity for real-time inference and training.

- Signal-to-noise ratio: More data ≠ better insights. Redundant or low-quality data can obscure emerging risks.

- Model generalization: AI may struggle with rare events or novel failure modes not present in training data.

- Latency and throughput: Real-time safety systems require low-latency processing, which limits batch size and complexity.

That practical assessment leads to the next step: deciding how to prioritize the inputs so that the vast streams of binary data (strings of 0s and 1s) that the various sources generate can feasibly be handled. Again, AI provided these categories, with an example of what counts as one record from each source:

| Source Type | Record Definition Example |
| --- | --- |
| Flight Data Recorder (FDR) | One timestamped snapshot of aircraft parameters (e.g., altitude, pitch, engine RPM) |
| Pilot Reports (PIREPs) | One narrative or structured report submitted by a pilot |
| Maintenance Logs | One entry detailing inspection, repair, or anomaly |
| ATC Communications | One transmission or transcript segment |
| Simulator Training Data | One training session or maneuver outcome |
| Safety Management System (SMS) | One hazard report, risk assessment, or mitigation action |

Each record may contain:

- Metadata: time, location, aircraft ID, crew ID

- Core data: numerical values, categorical labels, free text

- Contextual tags: weather, airspace, operational phase.
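
To make the three-part record structure concrete, here is a minimal sketch in Python. It is only an illustration: the class name `SafetyRecord`, its field names, and the sample values are all assumptions made for this post, not a schema published by CAE, Bombardier, or any standards body.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, Union


@dataclass
class SafetyRecord:
    """One record from any source type (hypothetical schema, for illustration only)."""

    # Which source produced the record, e.g. "Simulator Training Data"
    source_type: str

    # Metadata: time, location, aircraft ID, crew ID
    timestamp: datetime
    location: str
    aircraft_id: str
    crew_id: str

    # Core data: numerical values, categorical labels, free text
    core: Dict[str, Union[float, str]] = field(default_factory=dict)

    # Contextual tags: weather, airspace, operational phase
    tags: Dict[str, str] = field(default_factory=dict)


# Invented sample values; none of this is real training or flight data.
example = SafetyRecord(
    source_type="Simulator Training Data",
    timestamp=datetime(2025, 11, 12, 14, 30, tzinfo=timezone.utc),
    location="SIM-01",
    aircraft_id="SIM-TYPE-A",
    crew_id="CREW-0001",
    core={"maneuver": "rejected takeoff", "outcome": "satisfactory"},
    tags={"weather": "crosswind", "operational_phase": "takeoff"},
)
```

Keeping metadata, core data, and tags in separate fields means a new source type can add its own core values without disturbing the fields that every record shares.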

These provide useful terms to structure the initial design of this mega AI analytical program(s). All of the items listed above are drawn from established elements of the current SMS universe.

Monsieur Cateau’s challenge is to expand the inputs to his proposed “safety intelligence” tool. His presentation suggests that there’s more that should be considered (e.g., eye-tracking). Recent experience has taught that the scope of SMS-relevant details is hard to confine. Here are some rules that AI suggests should assist in identifying what might be considered for inclusion:

- Define new data schemas: What variables are missing? (e.g., pilot eye movement, cockpit ambient noise)

- Ensure interoperability: Align with existing standards like ARINC 429, RTCA DO-178C, or ICAO Annex 13.

- Embed semantic tagging: Use ontologies to describe events, actors, and causal chains.

- Enable edge capture: Use onboard AI to pre-process and compress data before transmission.

- Prioritize explainability: Ensure AI outputs are traceable to input records for audit and safety review.
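
The explainability rule is the easiest of these to picture in code. The sketch below assumes a hypothetical `Finding` type that always carries the IDs of the records it was derived from. A simple threshold rule stands in for whatever analytic (AI or otherwise) produces the finding; the function name, the bank-angle limit, and the session IDs are invented for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Finding:
    """An analytic output that stays traceable to its inputs (hypothetical type)."""

    summary: str
    source_record_ids: Tuple[str, ...]


def flag_high_bank(max_bank_by_session: Dict[str, float], limit_deg: float) -> Finding:
    """Flag sessions whose maximum bank angle exceeded limit_deg.

    The rule itself is a placeholder; the point is that the finding
    lists every input record that contributed to it.
    """
    exceeded = tuple(
        sorted(sid for sid, bank in max_bank_by_session.items() if bank > limit_deg)
    )
    return Finding(
        summary=f"{len(exceeded)} session(s) exceeded {limit_deg} degrees of bank",
        source_record_ids=exceeded,
    )


# Invented session IDs and values, for illustration only.
finding = flag_high_bank({"s1": 25.0, "s2": 35.0, "s3": 40.0}, limit_deg=30.0)
```

An auditor who questions the finding can pull the listed records and check them directly instead of taking the summary on faith.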

Cateau’s presentation points to an iterative path to his Safety Intelligence. Decisions are not imminent, but all aviation professionals should be conscious of seemingly mundane events that could be included in this big beautiful new AI-created AVIATION SAFETY MECHANISM.

Enhancing Aviation Safety with Training, Operational Data

Artificial intelligence tools will use captured information to improve training outcomes

© AIN/Matt Thurber

By Matt Thurber • Editor-in-Chief

November 12, 2025

TRAINING PROVIDERS are capturing data during training events and combining this with operational data to improve pilot training outcomes, CAE SENIOR MANAGER OF PRODUCT MANAGEMENT CLÉMENT CATEAU explained this morning at Bombardier Safety Standdown 2025. His presentation, “Enhance Safety Intelligence with Training Data,” explored how this data is being used to improve training and support safety management systems (SMS).

Just as aircraft operators use flight operational quality assurance to leverage aircraft data for SMS programs, so too are training providers with what is being called simulator operational quality assurance. This is all part of what Cateau identified as “safety intelligence…it’s about collecting data, transforming raw data into actionable insights for safety management systems.”

While Cateau’s presentation delved into various aspects of safety intelligence, one of the more interesting points he made was about the growing use of ARTIFICIAL INTELLIGENCE TOOLS in analyzing the DATA THAT IS CAPTURED.

He said the goal of using training data to enhance safety intelligence is “knowing what happened, why it happened, and providing automatically the recommendations to all different stakeholders and organizations…in an automated way.” This is done manually now and isn’t very systematic, Cateau added. In the future, however, there should be automatic ways to provide feedback based on operations and training data, and draw profiles and other inputs. “But we’re not quite there yet.”

Where AI could help would be in that automation, but Cateau sees AI as an aid, not a replacement, for all the stakeholders involved in training (pilots, instructors, heads of training and safety, OEMs, and regulators). “It’s a powerful technology that we should definitely consider,” he said.

More specifically, he explained, AI can provide helpful information—for example, during a debriefing after a training session because it has access to more data and observations that an instructor might not have seen. When combined with eye-tracking tools, “it will also be beneficial in terms of predictive crew performance modeling,” Cateau said.

“Based on analyzing previous scenarios and previous outcomes, we can one day create models that will…provide the outcome before they run in simulators and provide feedback to the operational world with that, and…maybe update some of the SOPs or training or [aircraft] design.”

AI might also help mitigate operational drift, where pilots increasingly fly outside of specified parameters. Using the concept of unsupervised learning, the safety intelligence data is tapped for useful information. “You feed the machine with the data and you let it find patterns, trends, correlation between inputs,” he said.

“So data mining…if we have the infrastructure to look at that in a very comprehensive way, we can expect some findings on the operational drift. For example, it’s really hard for a human to capture, is there an operational drift or not? It’s VERY HARD TO ANSWER THAT QUESTION, but having a more comprehensive dataset and SOME AI ALGORITHMS WE CAN EXPECT IN THE FUTURE, maybe [there is] some additional capability towards reducing that drift.”
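
To give a sense of what "is there an operational drift or not?" could look like as a computation, here is a deliberately simple sketch. It is a plain statistical comparison of a recent window against a baseline, not the unsupervised learning Cateau describes and not CAE's method. The function name `drift_score`, the choice of approach-speed deviation as the parameter, and all of the numbers are assumptions made for illustration.

```python
from math import sqrt
from statistics import mean, stdev
from typing import Sequence


def drift_score(baseline: Sequence[float], recent: Sequence[float]) -> float:
    """Return how many standard errors the recent mean sits from the baseline mean.

    A large absolute value suggests the recent flights or sessions differ
    from the baseline period and deserve a human look.
    """
    if len(baseline) < 2 or len(recent) < 1:
        raise ValueError("need at least 2 baseline values and 1 recent value")
    baseline_sd = stdev(baseline)  # sample standard deviation of the baseline
    if baseline_sd == 0:
        raise ValueError("baseline has no variation; score is undefined")
    standard_error = baseline_sd / sqrt(len(recent))
    return (mean(recent) - mean(baseline)) / standard_error


# Invented values: deviation from target approach speed, in knots.
baseline_kt = [0.0, 1.0, -1.0, 0.5, -0.5, 1.0, -1.0, 0.0]
recent_kt = [2.0, 2.5, 3.0, 2.5]

score = drift_score(baseline_kt, recent_kt)
needs_review = abs(score) > 3.0  # the threshold of 3 is an arbitrary assumption
```

A real system would have to handle many parameters at once, seasonal and fleet differences, and rare events, which is where the more comprehensive dataset and AI algorithms Cateau mentions would come in.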

[1] Transparency: my knowledge of AI is limited; so, it’s appropriate and ironic that AI is being used to provide the intelligence for this post.